Kubic:Elastic Inference Demo

Elastic Inference Demo for openSUSE Kubic

This demo provides a cloud-native model of an inference pipeline. The inference workload can be scaled vertically across heterogeneous hardware engines and horizontally with the Kubernetes Horizontal Pod Autoscaler. This makes it possible to handle diverse inference model sizes, diverse input sources, etc.

This demo was created as an eye-catcher for conferences; please do not use it in any production environment. It is based on the Elastic Inference Demo for Clear Linux, and the design and architecture are explained in detail in that project.

Micro Services

This Demo consists of several micro services. The corresponding containers are:

- ei-file-stream-service

- ei-inference-service

- ei-websocket-server

- ei-gateway-server

The workflow is:

Input Source

This could be a file or camera stream service. Each inference queue can have more than one input source. A frame queue service at <k8s cluster IP>:31003 accepts frames from any external producer, e.g. IP cameras.

Frame Queue

The frames are pushed into several frame queues according to inference type. Redis is used for this.

OpenVINO Inference Engine

The ei-inference-service is mainly based on the Deep Learning Deployment Toolkit (dldt) and the Open Source Computer Vision Library (OpenCV) from the OpenVINO™ Toolkit. It picks up individual frames from the stream queue and then runs inference on them.

The inference service can be used for any recognition or detection, as long as the corresponding models are provided.

The following models are used in this demo:

- car detection: person-vehicle-bike-detection-crossroad-0078

- face detection: face-detection-retail-0005

- people/body detection: person-detection-retail-0013

Stream Broker

The stream broker is the same Redis instance as the frame queue service; it receives the inference results for further processing.

Stream Websocket Server

This server subscribes to all result streams from the broker and sets up an individual WebSocket connection for each inference result stream.

Gateway

The gateway provides a unified interface for the backend services.

Deployment

The whole source code can be found in the devel:kubic:ei-demo OBS project.

RPMs can be installed from the corresponding repositories under https://download.opensuse.org/repositories/devel:/kubic:/ei-demo/

For x86-64, the repository can be added with:

zypper ar -f https://download.opensuse.org/repositories/devel:/kubic:/ei-demo/openSUSE_Tumbleweed/ ei-demo

Next, install the YAML files:

zypper in elastic-inference-demo-k8s-yaml

or, on a transactional system such as openSUSE Kubic:

transactional-update pkg in elastic-inference-demo-k8s-yaml

Now deploy the demo to the cluster with kubectl:

kubectl apply -f /usr/share/k8s-yaml/ei-demo/base/elastic-inference.yaml

or with kustomize:

kustomize build /usr/share/k8s-yaml/ei-demo/base | kubectl apply -f -
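After the manifests are applied, it can be useful to check that the pods are running and that the ports mentioned on this page (31002 for the dashboard, 31003 for the frame queue) are exposed. The following is a minimal sketch, assuming the demo exposes these ports as NodePort services. The helper name filter_ei_nodeports is hypothetical, and because the namespace is not stated here, all namespaces are searched:

```shell
#!/bin/sh
# Hypothetical helper: keep only service lines that expose node port
# 31002 (dashboard) or 31003 (frame queue). kubectl prints NodePort
# mappings in the PORT(S) column as <port>:<nodePort>/<protocol>.
filter_ei_nodeports() {
    grep -E ':(31002|31003)/'
}

# Usage (needs access to the cluster):
#   kubectl get pods --all-namespaces
#   kubectl get services --all-namespaces | filter_ei_nodeports
```

If the filter prints nothing, the services are either not deployed yet or are exposed in a different way than assumed here.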

Input Source

Now an input source needs to be configured.

Video

There are different sample input streams available:

kubectl apply -f /usr/share/k8s-yaml/ei-demo/samples/car/sample-car.yaml

kubectl apply -f /usr/share/k8s-yaml/ei-demo/samples/face/sample-face.yaml

kubectl apply -f /usr/share/k8s-yaml/ei-demo/samples/people/sample-people.yaml

The sample input streams can be deployed with kustomize, too:

kustomize build /usr/share/k8s-yaml/ei-demo/samples/car | kubectl apply -f -

kustomize build /usr/share/k8s-yaml/ei-demo/samples/face | kubectl apply -f -

kustomize build /usr/share/k8s-yaml/ei-demo/samples/people | kubectl apply -f -

Or all together at once:

kustomize build /usr/share/k8s-yaml/ei-demo/samples/all | kubectl apply -f -
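To deploy only a subset of the samples, the per-sample kubectl commands can be wrapped in a small loop. This is a sketch only: the function names sample_manifest and apply_samples are hypothetical, and the paths follow the pattern of the sample manifests listed above:

```shell
#!/bin/sh
# Print the manifest path for one sample type (car, face or people),
# following the path pattern of the sample manifests.
sample_manifest() {
    echo "/usr/share/k8s-yaml/ei-demo/samples/$1/sample-$1.yaml"
}

# Apply the given sample types one after another and stop at the
# first failure.
apply_samples() {
    for sample in "$@"; do
        kubectl apply -f "$(sample_manifest "$sample")" || return 1
    done
}

# Usage (needs access to the cluster):
#   apply_samples car face
```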

Camera

It's also possible to use a camera as input source. Install the script to run the camera stream service container:

zypper in elastic-inference-camera-stream

and run it:

run-ei-camera-stream-service -q <kubernetes cluster address>

Options for run-ei-camera-stream-service

- -v X: use /dev/videoX

- -t <type>: inference type: face, car or people

- -q <address>: external Kubernetes cluster address

- -p <port>: queue service port; by default, the queue service listens on port 31003

See run-ei-camera-stream-service -h for more options.
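As an illustration of how these options combine, the sketch below wraps the script in a function that checks the inference type before starting the service. The wrapper start_camera_stream is hypothetical and not part of the package; it only passes the -v, -t, -q and -p options described above, and the address in the usage line is a placeholder:

```shell
#!/bin/sh
# Hypothetical wrapper around run-ei-camera-stream-service.
# Arguments: <video device number> <inference type> <cluster address> [port]
start_camera_stream() {
    video=$1
    itype=$2
    address=$3
    port=${4:-31003}   # default queue service port

    # Only the three inference types used in this demo are accepted.
    case "$itype" in
        face|car|people) ;;
        *) echo "unknown inference type: $itype" >&2; return 2 ;;
    esac

    run-ei-camera-stream-service -v "$video" -t "$itype" -q "$address" -p "$port"
}

# Usage: stream /dev/video0 into the face detection queue.
#   start_camera_stream 0 face 192.0.2.10
```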

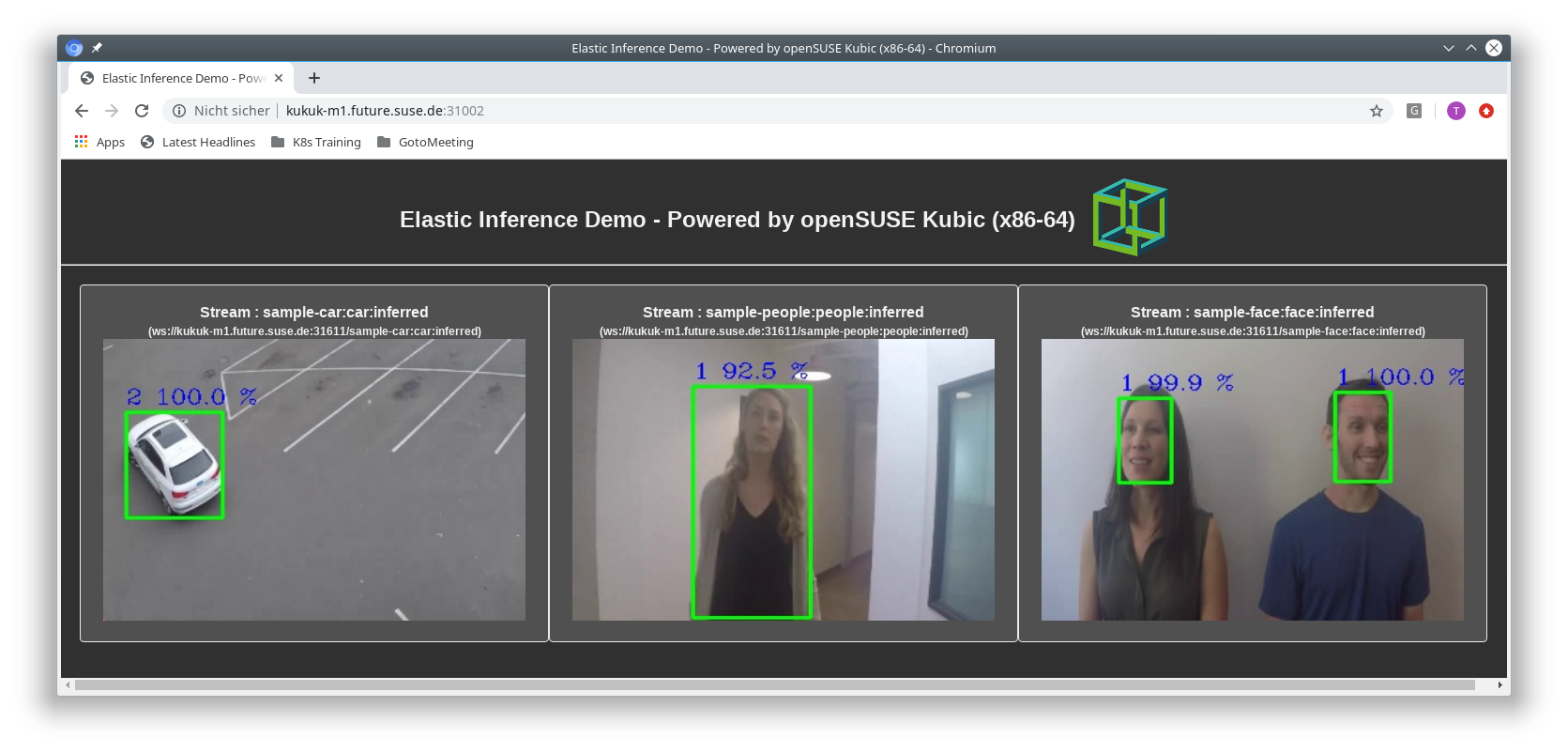

Output

By default, the dashboard single-page application (SPA) for previewing the results is available at:

http://<k8s cluster IP>:31002
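A quick way to check that the dashboard answers before opening it in a browser is sketched below. The helper dashboard_url is hypothetical and the address in the usage line is a placeholder for the cluster IP:

```shell
#!/bin/sh
# Build the dashboard URL from the cluster address; 31002 is the
# default dashboard port.
dashboard_url() {
    echo "http://$1:${2:-31002}"
}

# Usage: print the HTTP status code returned by the dashboard.
#   curl -s -o /dev/null -w '%{http_code}\n' "$(dashboard_url 192.0.2.10)"
```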