SDB:Sound concepts

Linux sound systems

On openSUSE, sound drivers are typically provided by ALSA. In most cases, sound is configured automatically at boot.

OSS (Open Sound System)

OSS is a portable sound interface for Unix systems, mentioned here only for historical reasons.

In the Linux kernel, the Open Sound System (OSS) was the only supported sound system up to the 2.4.x series. It was created in 1992 by the Finnish developer Hannu Savolainen (later improvements to OSS were made proprietary). Starting with Linux kernel version 2.5, ALSA, the Advanced Linux Sound Architecture, was introduced, and the kernel developers deprecated the OSS interface. On openSUSE, OSS output is supported by the audio output library "libao".

In July 2007, the OSS sources were released under the CDDL for OpenSolaris and under the GPL for Linux.

Reference:

http://en.wikipedia.org/wiki/Open_Sound_System

OSS is now considered obsolete, although ALSA provides an optional in-kernel OSS emulation mode:

https://alsa.opensrc.org/OSS_emulation

ALSA (Advanced Linux Sound Architecture)

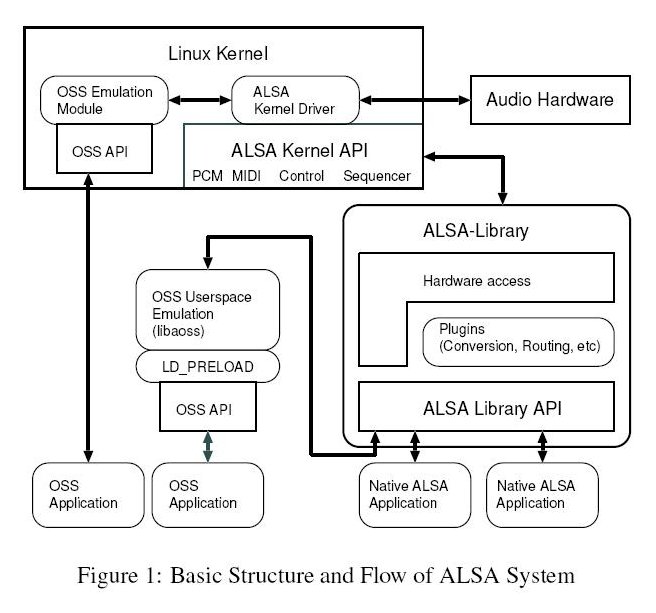

ALSA is the recommended interface for software that is intended to work on Linux only. It supports many PCI and ISA "plug and play" sound cards, providing both kernel hardware drivers for audio cards and a library that makes the ALSA application programming interface (API) available to software applications. For backward compatibility, ALSA also contains an optional OSS emulation mode that transparently appears to programs as if it were OSS. The ALSA sound drivers are part of the Linux kernel source code and are usually built as loadable modules rather than compiled into the kernel image. One feature of ALSA not available in the original OSS is that several applications can share a sound device at the same time.
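To see which cards ALSA has detected, one can read /proc/asound/cards, a text file exposed by the ALSA kernel drivers. The following is a minimal Python sketch that parses that file. The `SoundCard` and `parse_asound_cards` names are hypothetical, and the regular expression assumes the usual layout of one ` N [ID ]: driver - name` line per card, followed by an indented description line.

```python
import re
from dataclasses import dataclass

# Matches the first line of each card entry, for example:
#  " 0 [PCH            ]: HDA-Intel - HDA Intel PCH"
# Indented continuation lines (the long description) do not match.
CARD_LINE = re.compile(r"^\s*(\d+)\s+\[(\S+)\s*\]:\s+(\S+)\s+-\s+(.+?)\s*$")


@dataclass
class SoundCard:
    index: int    # card number, as used in ALSA device names such as hw:0
    card_id: str  # short card ID, e.g. "PCH"
    driver: str   # ALSA driver name, e.g. "HDA-Intel"
    name: str     # human-readable card name


def parse_asound_cards(text: str) -> list[SoundCard]:
    """Parse the contents of /proc/asound/cards into SoundCard records."""
    cards = []
    for line in text.splitlines():
        m = CARD_LINE.match(line)
        if m:
            cards.append(SoundCard(int(m.group(1)), m.group(2), m.group(3), m.group(4)))
    return cards


if __name__ == "__main__":
    try:
        with open("/proc/asound/cards") as f:
            text = f.read()
    except OSError:
        text = ""  # ALSA not loaded, or /proc not available
    for card in parse_asound_cards(text):
        print(f"hw:{card.index}  {card.card_id}  {card.driver}  {card.name}")
```

The card index is what device names such as `hw:0` refer to; `aplay -l` from the alsa-utils package shows similar information.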

- OSS: http://en.wikipedia.org/wiki/Open_Sound_System

- ALSA: http://en.wikipedia.org/wiki/Advanced_Linux_Sound_Architecture

- ALSA Project page: http://www.alsa-project.org/

- Unofficial ALSA wiki: http://alsa.opensrc.org/

Multimedia interface with sound

A media player typically (but not always) uses either xine or GStreamer as its sound engine. Xine and GStreamer give media player developers an interface to build their applications on, so the applications do not have to go a layer deeper and talk to the system sound interface directly.

GStreamer

GStreamer is a multimedia framework written in the C programming language, with a type system based on GObject. The GNOME desktop environment is the primary Linux user of GStreamer technology. In GNOME, PulseAudio or, more recently, PipeWire handles sound server duties, while GStreamer handles encoding and decoding. GStreamer serves a host of multimedia applications, such as video editors, streaming media broadcasters and media players. Designed to be cross-platform, it is known to work on Linux (x86, PowerPC and ARM), Solaris (x86 and SPARC), Mac OS X, Microsoft Windows and OS/400. GStreamer uses a plugin architecture in which most of its functionality is implemented as shared libraries. These plugin libraries are loaded dynamically to support a wide spectrum of codecs, container formats and input/output drivers.
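GStreamer's command-line tool `gst-launch-1.0` describes a pipeline as a chain of elements linked with `!`, each element coming from a plugin. The sketch below imitates that idea in plain Python purely for illustration. It is not the GStreamer API: the plugin registry here is a dictionary rather than dynamically loaded shared libraries, and the element names `testsrc` and `halfvolume` are hypothetical.

```python
from typing import Callable, Iterator, Optional

# Hypothetical element type: it receives the upstream stream of sample
# blocks (None for a source element) and yields blocks downstream.
Stream = Iterator[list[float]]
Element = Callable[[Optional[Stream]], Stream]

# Stand-in for GStreamer's plugin registry.
REGISTRY: dict[str, Element] = {}


def plugin(name: str) -> Callable[[Element], Element]:
    """Decorator that registers an element under a name, as a plugin would."""
    def register(element: Element) -> Element:
        REGISTRY[name] = element
        return element
    return register


@plugin("testsrc")
def test_source(_upstream: Optional[Stream]) -> Stream:
    # Produces three fixed blocks of samples instead of real audio.
    for _ in range(3):
        yield [0.5, -0.5, 0.25]


@plugin("halfvolume")
def half_volume(upstream: Optional[Stream]) -> Stream:
    assert upstream is not None, "halfvolume needs an upstream element"
    for block in upstream:
        yield [s * 0.5 for s in block]


def build_pipeline(description: str) -> Stream:
    """Link registered elements from an 'a ! b ! c' description, left to right."""
    stream: Optional[Stream] = None
    for name in (part.strip() for part in description.split("!")):
        if name not in REGISTRY:
            raise KeyError(f"no such element: {name}")
        stream = REGISTRY[name](stream)
    assert stream is not None
    return stream
```

For example, `list(build_pipeline("testsrc ! halfvolume"))` yields three blocks of `[0.25, -0.25, 0.125]`. The application only names elements; which code implements them is decided by whatever is registered.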

More info: http://en.wikipedia.org/wiki/GStreamer

Phonon

KDE Plasma 5 uses the Phonon multimedia API. Phonon provides a common interface on top of other backends, including the GStreamer and VLC backend libraries.

For more information refer to: http://en.wikipedia.org/wiki/Phonon_(KDE)

Phonon offers a consistent API for processing both audio and video within multimedia applications. The API is designed to be Qt-like, so it offers KDE developers a familiar style of functionality. It is important to state what Phonon is not: it is not a sound server. Rather, because multimedia programming keeps shifting, it offers a consistent API that wraps around these other multimedia technologies. For example, if GStreamer changed its API, only Phonon would need to be adjusted, instead of each KDE application individually.

Phonon is powered by what its developers call "engines", with one engine for each supported backend.

Currently, the two main supported backends are GStreamer and VLC, represented by the following packages:

phonon4qt5-backend-gstreamer phonon4qt5-backend-vlc

There is a "phononsettings" GUI that allows one to configure the priority of these two backends as desired.
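The idea behind Phonon, one stable API for applications with interchangeable backends selected by priority, can be sketched as a small facade in Python. This is a hypothetical illustration, not Phonon's real API (which is C++ and Qt-based): the `Backend`, `GStreamerBackend`, `VlcBackend` and `MediaPlayer` classes are invented for the example, and `play` returns a string instead of producing sound.

```python
from abc import ABC, abstractmethod


class Backend(ABC):
    """Hypothetical backend ("engine") interface; not Phonon's real API."""

    name = "base"

    def __init__(self, installed: bool = True) -> None:
        self.installed = installed  # e.g. whether the backend package is present

    @abstractmethod
    def play(self, uri: str) -> str:
        """Start playback; returns a description instead of producing sound."""


class GStreamerBackend(Backend):
    name = "gstreamer"

    def play(self, uri: str) -> str:
        return f"gstreamer: playing {uri}"


class VlcBackend(Backend):
    name = "vlc"

    def play(self, uri: str) -> str:
        return f"vlc: playing {uri}"


class MediaPlayer:
    """Stable front-end API used by applications; the backend stays hidden."""

    def __init__(self, backends: list[Backend], priority: list[str]) -> None:
        by_name = {b.name: b for b in backends if b.installed}
        # Pick the first installed backend in the user's priority order,
        # similar in spirit to the ordering phononsettings lets users set.
        for name in priority:
            if name in by_name:
                self.backend = by_name[name]
                break
        else:
            raise RuntimeError("no usable backend installed")

    def play(self, uri: str) -> str:
        return self.backend.play(uri)
```

Applications only ever call `MediaPlayer.play`, so installing, removing or reprioritizing a backend does not require changing them.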

Linux sound servers or daemons

Like many other parts of Linux, the sound system is built on a client-server model. The sound server is the part responsible for the actual communication with the hardware, the sound card. Various clients can connect to the sound server and send it sound data to be played on the sound card.

These clients can be ordinary programs such as an MP3 player or a video player, or in some cases a client running on another computer connected over the network. Supported sound servers:

- ALSA (with dmix plugin support)

- JACK

- PulseAudio

- PipeWire

dmix

dmix is part of ALSA rather than a separate sound system. It is included here because it acts as an "indirection layer" between applications and the sound card. ALSA's PCM plugins extend the functionality of PCM devices, allowing low-level sample conversions and copying between channels, files and sound card devices. The dmix plugin in particular provides direct mixing of multiple streams, so that several applications can play through one sound card at the same time, and it is enabled by default.
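The core of what dmix does, summing the samples of several streams into one, can be illustrated with a short Python function. This is a simplified sketch of the arithmetic only, assuming signed 16-bit samples; the name `mix_s16` is hypothetical, and the real dmix plugin mixes into a buffer shared between processes rather than working on Python lists.

```python
def mix_s16(*streams: list[int]) -> list[int]:
    """Mix signed 16-bit PCM streams sample by sample, clipping the sum.

    Shorter streams are treated as silence once they run out.
    """
    length = max((len(s) for s in streams), default=0)
    mixed = []
    for i in range(length):
        total = sum(s[i] for s in streams if i < len(s))
        # Saturate to the 16-bit range instead of wrapping around,
        # which would turn loud passages into harsh noise.
        mixed.append(max(-32768, min(32767, total)))
    return mixed
```

For example, `mix_s16([30000], [10000])` saturates to `[32767]` instead of overflowing.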

More info: https://alsa.opensrc.org/Dmix

JACK

JACK is a low-latency audio server written for POSIX-conformant operating systems such as GNU/Linux and Apple's OS X. It can connect a number of different applications to an audio device, and it also lets them share audio among themselves. Its clients can run in their own processes (i.e., as normal applications) or within the JACK server (i.e., as a "plugin"). On Linux, JACK relies on ALSA to provide the kernel hardware drivers. JACK was designed from the ground up for professional audio work, and its design focuses on two key areas: synchronous execution of all clients, and low-latency operation.
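Much of JACK's latency comes from its buffer settings. With the ALSA backend these are the sample rate, the frames per period and the number of periods (the `-r`, `-p` and `-n` options of `jackd -d alsa`). The Python sketch below computes the nominal buffer latency as frames × periods ÷ rate; the function name is hypothetical, and the figure ignores additional delays in the hardware and converters.

```python
def jack_buffer_latency_ms(frames_per_period: int, periods: int, sample_rate: int) -> float:
    """Nominal buffer latency in milliseconds: frames * periods / rate."""
    if min(frames_per_period, periods, sample_rate) <= 0:
        raise ValueError("all parameters must be positive")
    return 1000.0 * frames_per_period * periods / sample_rate


if __name__ == "__main__":
    # Compare a very low-latency period size with safer, larger ones.
    for frames in (64, 256, 1024):
        latency = jack_buffer_latency_ms(frames, 2, 48000)
        print(f"{frames} frames x 2 periods @ 48000 Hz -> {latency:.2f} ms")
```

For example, 256 frames and 2 periods at 48000 Hz give about 10.7 ms. Smaller periods lower the latency but raise the risk of xruns (buffer under- or overruns).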

More info: http://jackit.sourceforge.net/

PulseAudio

PulseAudio is a networked sound server, similar in theory to the Enlightened Sound Daemon (EsounD). It provides:

- Software mixing of multiple audio streams, bypassing any restrictions the hardware has.

- Network transparency, allowing an application to play back or record audio on a different machine than the one it is running on.

- Sound API abstraction, alleviating the need for multiple backends in applications to handle the wide diversity of sound systems out there.

- Generic hardware abstraction, giving the possibility of doing things like individual volumes per application.

PulseAudio comes with many plugin modules, and all audio to and from clients and audio interfaces passes through modules. PulseAudio clients send audio to "sinks" and receive audio from "sources". A client can be GStreamer, VLC, or any other audio application. Clients themselves are never sources or sinks; those represent device drivers and audio interfaces, which are often hardware inputs and outputs.
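The relationship between clients, sinks and per-application volume can be sketched as a toy model in Python. The `SinkInput` and `Sink` classes are hypothetical and are not PulseAudio's API (real clients talk to the server through libpulse). Volumes here are a plain linear gain on floating-point samples, whereas PulseAudio's own volume scale is not linear.

```python
from dataclasses import dataclass, field


@dataclass
class SinkInput:
    """One client's playback stream connected to a sink (toy model)."""
    client: str
    samples: list[float]
    volume: float = 1.0  # per-application volume: 0.0 = muted, 1.0 = unchanged


@dataclass
class Sink:
    """An output, such as a sound card's speakers, that mixes its streams."""
    name: str
    inputs: list[SinkInput] = field(default_factory=list)

    def connect(self, stream: SinkInput) -> None:
        self.inputs.append(stream)

    def render(self) -> list[float]:
        length = max((len(s.samples) for s in self.inputs), default=0)
        out = [0.0] * length
        for stream in self.inputs:
            # Each client's own volume is applied before mixing.
            for i, sample in enumerate(stream.samples):
                out[i] += sample * stream.volume
        # Clamp to the normalized float range [-1.0, 1.0].
        return [max(-1.0, min(1.0, x)) for x in out]
```

Because each stream carries its own volume, turning down one application does not affect the others that play through the same sink.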

More info:

PipeWire

Refer: